Project

Our team's goal was to create an assistive technology app for hard-of-hearing users using the newly released Magic Leap One headset.

Ian using the Magic Leap Headset

Challenges

Build a demo that showcased the potential of Magic Leap One as an assistive technology device

Create a real-world intervention using Unity that would be helpful to hard-of-hearing individuals

Use the current hardware and software of the device to its greatest effect

Additionally, we had to work around issues we encountered with the device

Brainstorming and Scenario-Building

Role

Inspired by a friend's story, I pitched the concept of using Magic Leap One as an assistive technology device at a VR hackathon. As a UX designer and Unity technologist, I worked on the project, liaising with Magic Leap employees to learn about its possibilities and limitations.

Process

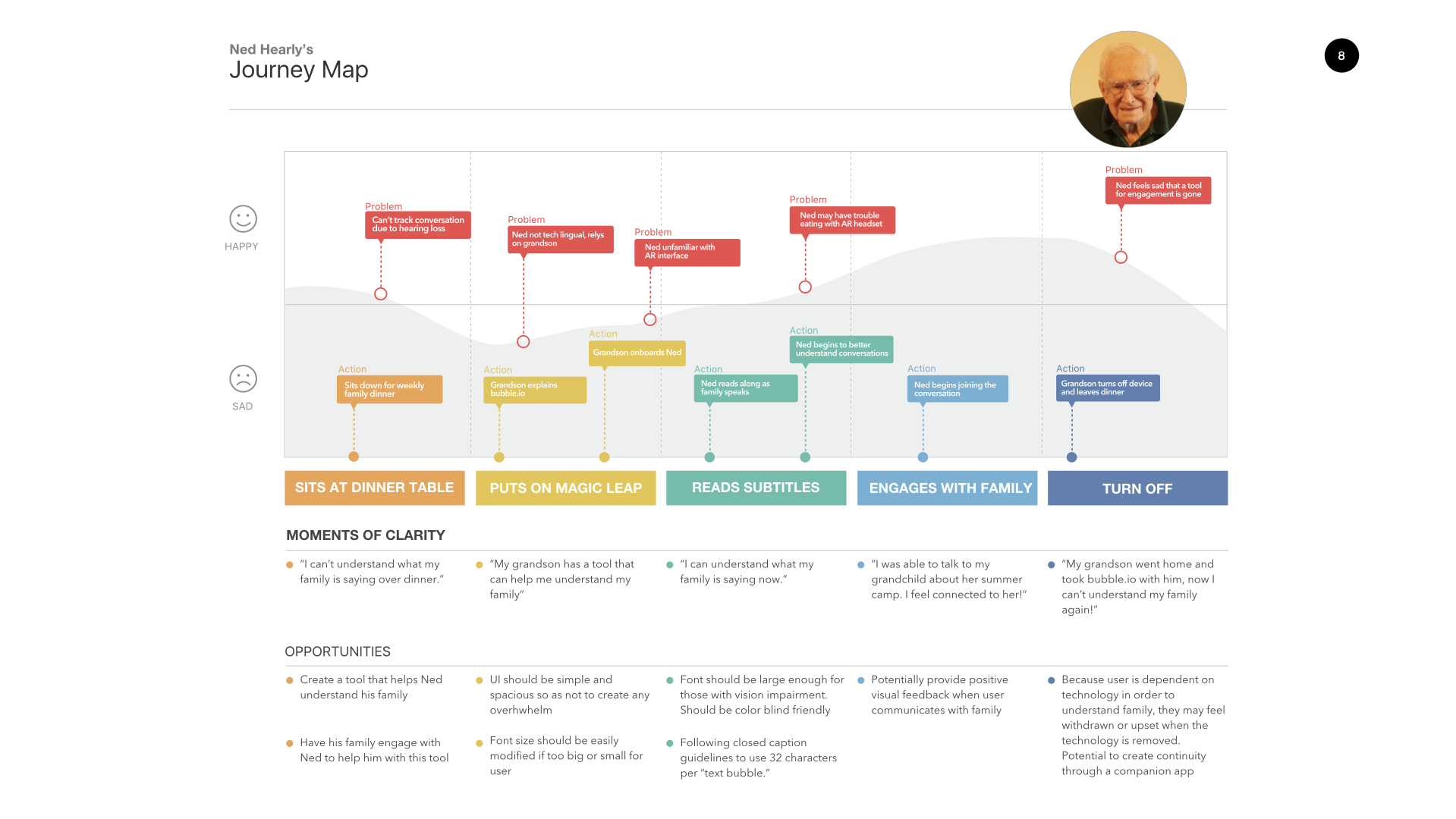

Our team of three designers began by researching and building a "Wizard of Oz" prototype for the Magic Leap One augmented reality headset. Since the headset had just been released, we started by exploring the device to learn its affordances and what had already been done with it.

We brainstormed ideas for how to use the new device as an assistive technology, drawing from stories shared by our families and friends. We initially focused on subtitles or speech bubbles for reality.

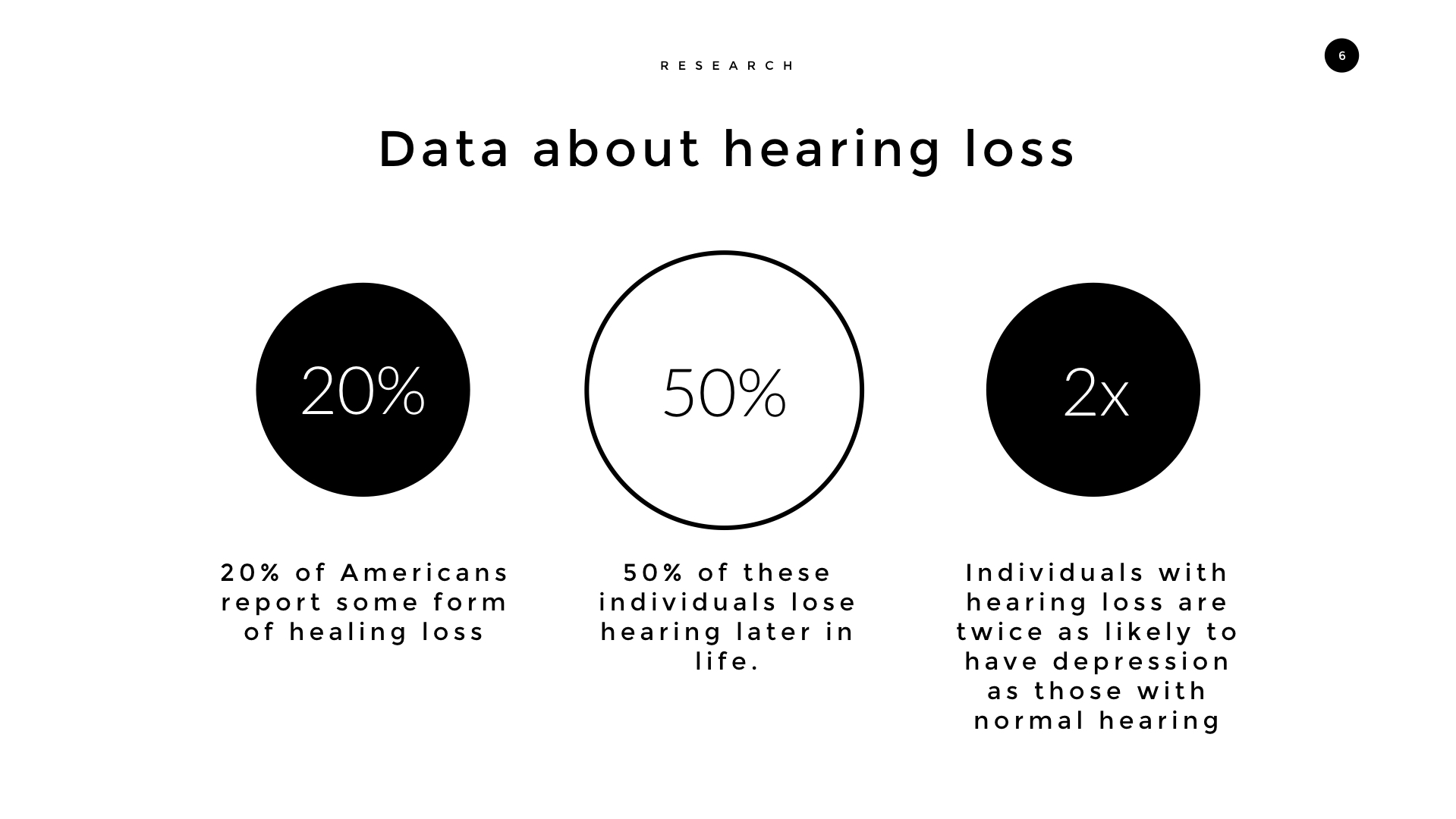

Through research, we learned about the prevalence and profound effects of hearing loss on a sense of belonging, which can contribute to depression, anxiety, and paranoia.

Whiteboarding the Problem

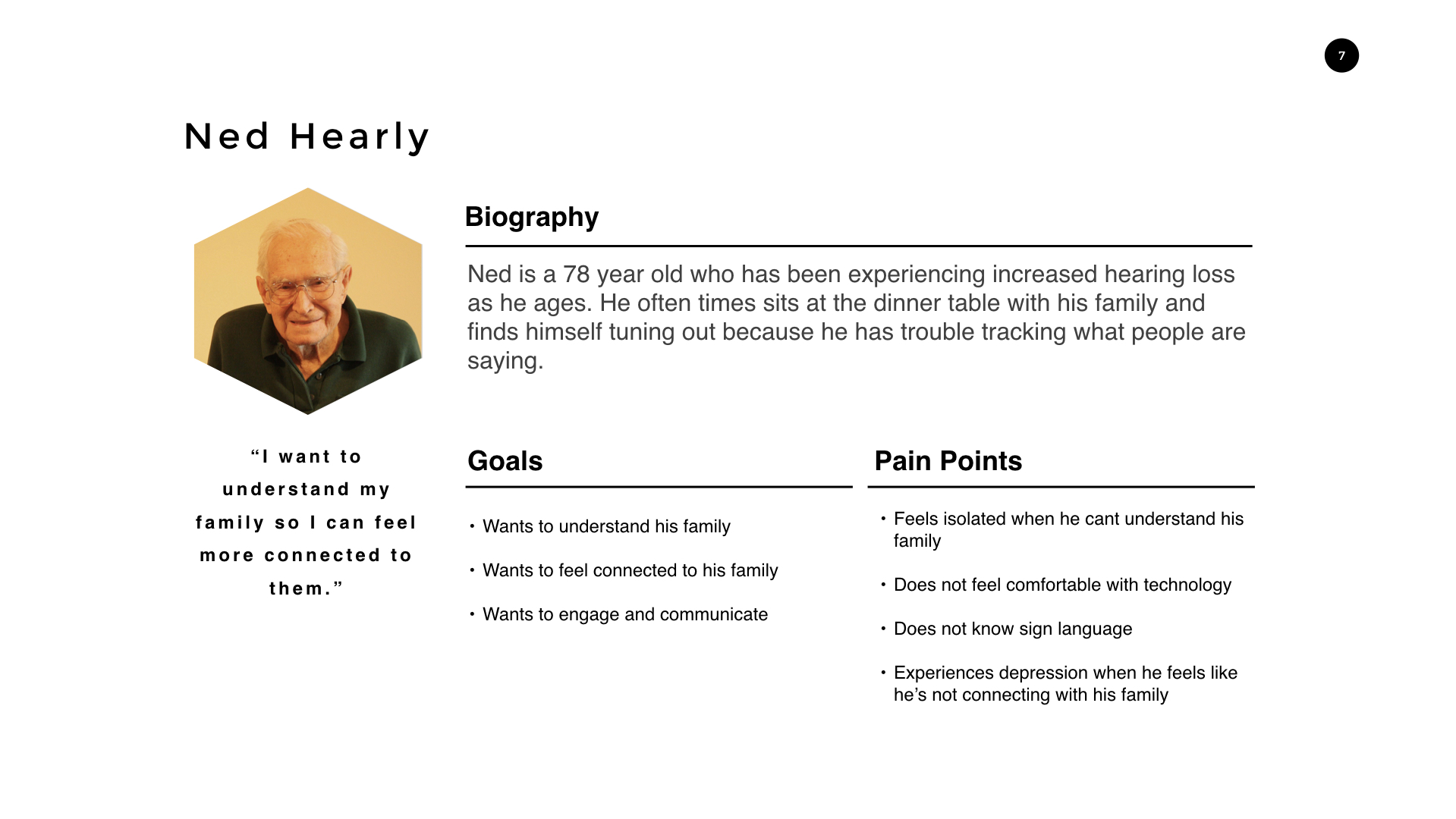

We then sketched, whiteboarded, and role-played scenarios, focusing on one shared by a team member about their grandfather, who had trouble hearing and struggled to feel connected during family dinner conversations. We wanted to find ways to help him feel more engaged.

We encountered challenges while working on the project, primarily because we had limited access to the headset. However, we persisted, and one team member had the idea to gather information for our presentation and portfolios. Later, we were given a headset dedicated to our full-time use, which significantly improved our situation.

Initially, we planned to set up a simple speech-to-text algorithm to fill the speech bubbles. However, we encountered a snag because the internal microphone was intended to capture audio from the person using the device, not someone across the table in a noisy room. We developed a workaround by running the device in a "live" mode, which allowed us to use the resources of the attached computer and plug in a shotgun mic to capture audio.

I designed 3D objects of various sizes to serve as backgrounds for our speech bubbles using Autodesk's Fusion 360. Our goal was to have the system swap out the speech bubbles depending on the length of the text being spoken.
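The swapping idea above can be sketched in a few lines. This is only an illustration in Python, not the Unity code from the project: the size names, the character capacities, and the `pick_bubble` helper are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BubbleSize:
    name: str
    max_chars: int  # hypothetical capacity, not measured from the real models


# Assumed sizes, ordered from smallest to largest.
BUBBLE_SIZES = (
    BubbleSize("small", 40),
    BubbleSize("medium", 90),
    BubbleSize("large", 160),
)


def pick_bubble(text: str) -> BubbleSize:
    """Return the smallest bubble whose capacity fits the text."""
    length = len(text.strip())
    for size in BUBBLE_SIZES:
        if length <= size.max_chars:
            return size
    # Text longer than every capacity falls back to the largest bubble.
    return BUBBLE_SIZES[-1]
```

A real version would likely measure rendered text width rather than character count, and might split very long utterances across several bubbles.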

After setbacks and technical difficulties, we changed our goal to performing a scene in which two team members posed as family members and the demo participant was the third person at the family dinner table. The final product was a "Wizard of Oz" demo, so named because a person behind the curtain makes things happen.

We wrote a script and set up stationary virtual objects that would be shown and hidden one after the other. The Magic Leap One can scan the room to have objects interact with walls and tables. In our case, this enabled us to lock the speech bubbles to a specific place floating above the table, which helped us pull off our scenario.
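The show-and-hide logic is simple enough to sketch. The Python below is a stand-in for illustration only, not the script we ran in Unity; the `BubbleSequencer` class and the sample lines are hypothetical.

```python
class BubbleSequencer:
    """Steps through scripted lines, showing one speech bubble at a time."""

    def __init__(self, lines):
        self._lines = list(lines)
        self._index = -1  # nothing is shown before the first button press

    @property
    def visible(self):
        """The (speaker, text) pair currently shown, or None if all are hidden."""
        if 0 <= self._index < len(self._lines):
            return self._lines[self._index]
        return None

    def advance(self):
        """Hide the current bubble and show the next one, if any remain."""
        if self._index < len(self._lines):
            self._index += 1
        return self.visible


# Placeholder script; the lines are made up for this example.
script = [
    ("Speaker A", "What was that ingredient again?"),
    ("Speaker B", "I can never remember it."),
]
sequencer = BubbleSequencer(script)
sequencer.advance()  # the operator presses the button after the first spoken line
```

Once the script runs out, `advance` leaves every bubble hidden, so an extra button press from the operator does no harm.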

Learnings & Future

With great power comes great responsibility: As designers, we need to be aware of the potential for manipulation and avoid influencing or manipulating users into doing something that might harm them.

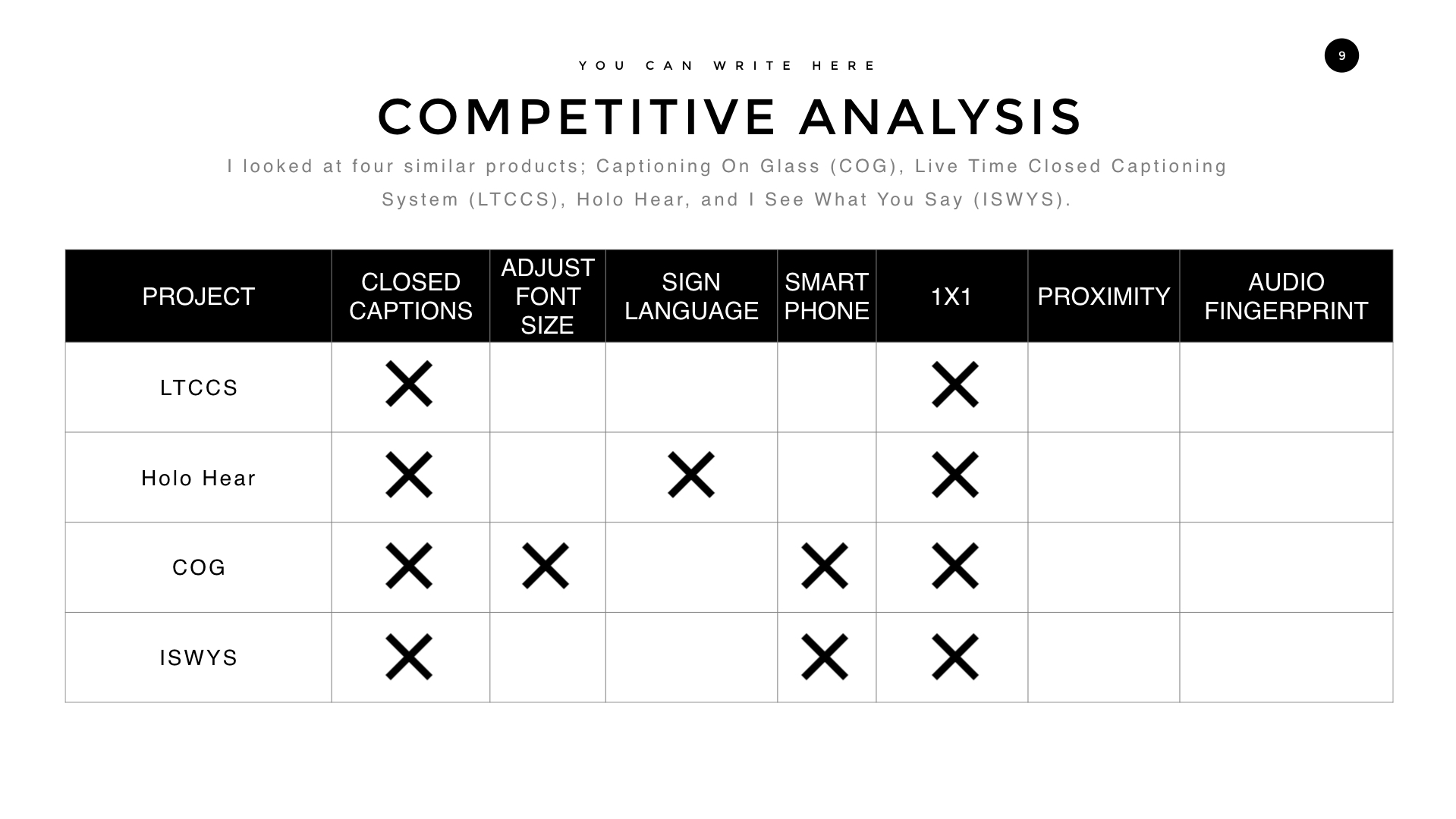

Speech to Text: We found many options for speech-to-text processing software and libraries. Our next steps in making the project functional would involve trying many of these to see what output each produces, how fast each is, and whether the results of this testing change our ideas and design. An MVP version of the app might merely show text at the bottom of the display as soon as it is recognized, i.e., closed captioning for reality.

Who’s Talking & What Are They Saying? We had multiple ideas for capturing audio input. The simpler one is to have each speaker wear a clip-on Bluetooth mic that sends audio to the app. Because each audio stream would belong to one speaker, attributing each part of the conversation would be straightforward. A more ambitious idea would be to use multiple microphones and machine learning to figure out where the different voices are coming from.
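To show why the clip-on mic approach makes attribution easy, here is a minimal Python sketch. It assumes each recognized utterance arrives tagged with the ID of the mic that captured it and a start time; the `Utterance` type, the mic IDs, and the `label_conversation` helper are hypothetical, and no real speech-to-text library is involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Utterance:
    mic_id: str     # hypothetical ID of the clip-on mic that captured the audio
    start_s: float  # start time in seconds, assumed to share one clock
    text: str       # recognized text, assumed to come from a speech-to-text step


def label_conversation(utterances, mic_to_speaker):
    """Merge per-mic utterances into one time-ordered, speaker-labeled list."""
    ordered = sorted(utterances, key=lambda u: u.start_s)
    return [(mic_to_speaker.get(u.mic_id, "Unknown"), u.text) for u in ordered]
```

Since each mic maps to one wearer, the speaker label is a dictionary lookup. The multi-microphone, machine-learning idea would have to infer that label from the audio itself.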

Multiple Languages: The app would need to handle multiple languages. This would involve figuring out how to recognize speech in different languages, as well as how to translate it in real time. This could involve partnering with existing translation services or developing our own translation algorithm.

Would People Wear That? The Magic Leap headset is itself a bit of a prototype for our scenario. It’s more wearable, more portable, and more of a consumer product than most AR and VR devices. However, it’s unlikely someone would wear this headset for every meal with their family. It’s still bulky, and one very important consideration is that you can’t see the wearer’s eyes behind the shaded glass. If they’re not wearing it all of the time, then they’re unlikely to have it on at home at the moment they miss someone asking a question. To be something that people would wear all of the time, the device will have to shrink to the size of thick sunglasses frames and, for our scenario, have microphones arrayed around the frames to pick up this kind of audio.

Outcome

The final product was a proof-of-concept Wizard of Oz experiment in which we asked our participants to experience the demo as if they were hard of hearing. We shared our research about hearing loss and the problems that it can cause, then told each person that they would be playing the part of someone who was hard of hearing and gave them a pair of earplugs to wear. Nicole and Philip spoke quietly to further the effect, with the result that the participants were unable to hear what they said.

The participant sat at the head of the table, unable to hear the first pieces of the conversation, which was about trying to remember a particular ingredient in grandma’s chili. Philip then handed the participant the Magic Leap headset as a potential way to include this family member in the conversation. We used the Wizard of Oz prototyping method: after each line was spoken, we would press a button to progress through a series of speech bubbles that matched the pre-set conversation. The person experiencing the demo couldn’t hear what was being said, but they could see speech bubbles showing up in between our conversationalists, and could turn back and forth to read the conversation. The goal was to have them participate in the conversation, so they were asked a question. The act of answering helped them reconnect and participate in the family dinner table conversation.

We received the Award for Outstanding Novelty! All of the judges could see the promise and possibilities of the idea, and all wanted something like this project to be made real. We had great conversations with each of the judges, who shared feedback, anecdotes about family members who would be helped by this, and suggestions for features they would want to see.

A key moment in many of the demo sessions was the realization, “oh, they’re going to show me what is being said!” The best response came from one of the judges, who clapped giddily!

Short video of what a demo participant sees through the Magic Leap One headset device